Can I learn the node settings used for the Korean 720-degree sphere screen?

Yes =)

You can start with node basics in our manual:

If you have a specific question, please ask.

I already know the basic node operations. I want to learn the node setup for the 720-degree sphere in South Korea. Can you give me an example?

Right, this is about the sphere projection we did in 2017 in South Korea with Metaspace:

I can’t share the project or explain in detail how we did it.

It was quite experimental at the time, with Screenberry version 2.7 working at the edge of its capabilities.

We did a full sphere screen calibration with the help of 4 cameras with fisheye lenses located on the bridge.

The first stage is to calibrate the 4 cameras to produce “one image”, and then use our calibration algorithm to calibrate the projectors with black and white maps.

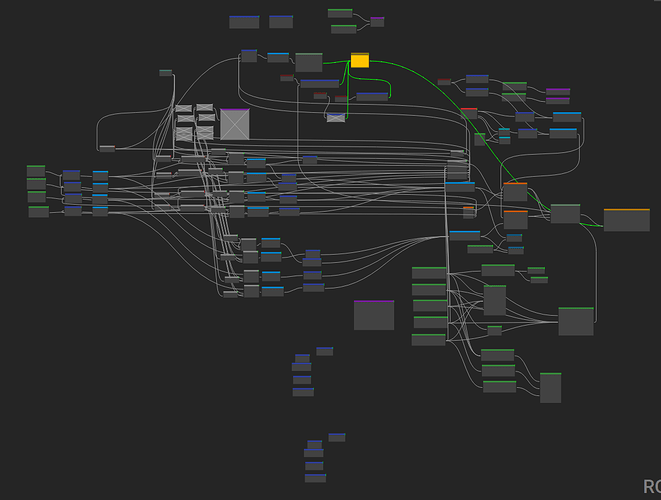

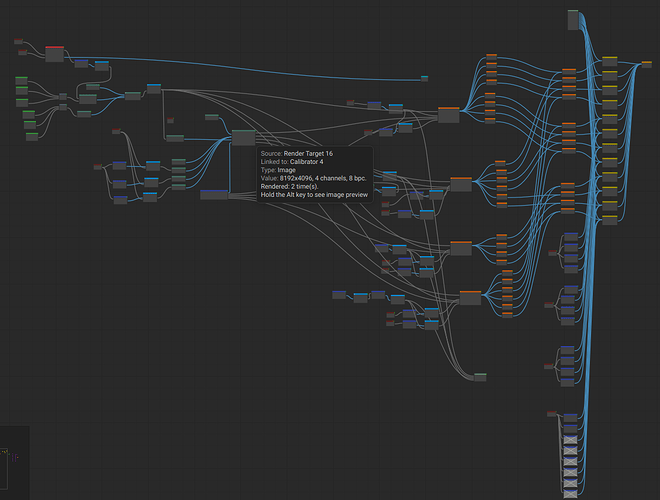
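Screenberry’s own black and white patterns are not described in this thread. As a rough illustration of the general idea only: many structured-light systems use Gray-code stripe patterns, where each projector column is shown as a sequence of black/white frames and each camera pixel recovers which column it sees from the sequence it observed. The function names below are hypothetical; this is a minimal sketch of that generic technique, not Screenberry’s algorithm.

```python
# Hypothetical sketch of generic Gray-code structured light (NOT Screenberry's algorithm).


def gray_encode(n: int) -> int:
    """Binary -> reflected Gray code; neighbouring columns differ in exactly one bit."""
    return n ^ (n >> 1)


def gray_decode(g: int) -> int:
    """Reflected Gray code -> binary."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def stripe_patterns(width: int, bits: int) -> list[list[int]]:
    """Return `bits` frames, most significant bit first.

    Each frame holds 0 (black) or 1 (white) for every projector column.
    """
    if width > 2 ** bits:
        raise ValueError("not enough bits to encode every column")
    return [[(gray_encode(x) >> (bits - 1 - b)) & 1 for x in range(width)]
            for b in range(bits)]


def decode_column(observed: list[int]) -> int:
    """Rebuild the Gray code from the black/white values one camera pixel saw
    (one value per frame, MSB first) and return the projector column."""
    g = 0
    for bit in observed:
        g = (g << 1) | bit
    return gray_decode(g)


if __name__ == "__main__":
    frames = stripe_patterns(width=1024, bits=10)
    # Pretend one camera pixel looks at projector column 517.
    seen_by_pixel = [frame[517] for frame in frames]
    print(decode_column(seen_by_pixel))
```

Practical systems often also project inverted patterns for more robust thresholding and repeat the process for rows; both are omitted here for brevity.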

The node graph was complicated and required too much manual work to align the camera images beforehand.

We have since moved on to a new approach: each camera calibrates a part of the screen. The calibrated parts can then be aligned together with a single camera calibration, if possible, or manually via 3D Scene remapping or simply with 2D patches.

We did this with Hive on the Frameless project in 2022.

The old nodes used for that Sphere 360 calibration were removed in version 3.1.

Multi-camera calibration is still experimental in Screenberry 3.2, and we usually do all the configuration on site or remotely. If you have a project, please contact us with the details and we will help you with the node graph configuration.

We plan to improve multi-camera calibration in the next Screenberry version, 3.3, this spring.

I’m looking forward to automatic fusion of multiple cameras working well, so that we can debug easily.

Can you provide some ideas on how to achieve this with Screenberry 3.2?

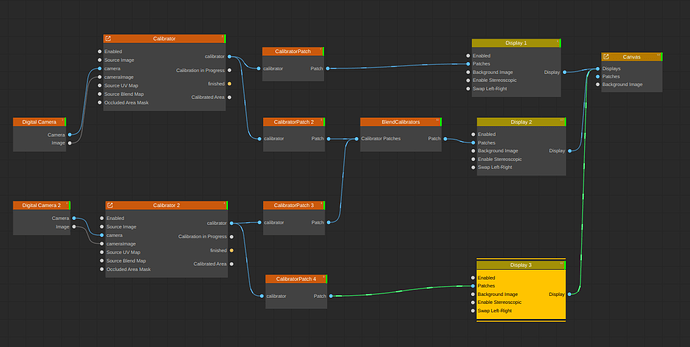

For example, suppose you want to calibrate a 3-projector panorama with 2 cameras.

The first camera calibrates the 2 left projectors. The second camera calibrates the middle and right projectors. So both cameras calibrate the middle projector. Use the Blend Calibrators node for projectors that you want to calibrate from both cameras.
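How the Blend Calibrators node combines the two results internally is not described here. One generic way to merge two estimates for a projector seen by both cameras is a linear cross-fade across that projector, trusting camera 1’s result at the left edge and camera 2’s at the right. The sketch below assumes each camera yields one (u, v) content coordinate per projector column; the function names and data layout are hypothetical.

```python
# Hypothetical sketch: cross-fade two per-column UV estimates for the middle projector.
# This only illustrates the idea; it is not the documented behaviour of Blend Calibrators.

UV = tuple[float, float]


def crossfade_weights(width: int) -> list[float]:
    """Weight given to camera 2 for each column: 0.0 at the left edge, 1.0 at the right."""
    if width == 1:
        return [0.5]
    return [x / (width - 1) for x in range(width)]


def blend_uv(uv_cam1: list[UV], uv_cam2: list[UV]) -> list[UV]:
    """Linearly blend the two estimates column by column."""
    if len(uv_cam1) != len(uv_cam2):
        raise ValueError("both estimates must cover the same columns")
    weights = crossfade_weights(len(uv_cam1))
    return [((1 - t) * u1 + t * u2, (1 - t) * v1 + t * v2)
            for t, (u1, v1), (u2, v2) in zip(weights, uv_cam1, uv_cam2)]


if __name__ == "__main__":
    cam1 = [(0.33 + 0.01 * x, 0.5) for x in range(5)]
    cam2 = [(0.34 + 0.01 * x, 0.5) for x in range(5)]  # camera 2 disagrees by 0.01
    for u, v in blend_uv(cam1, cam2):
        print(f"{u:.4f} {v:.4f}")
```

A linear ramp is the simplest choice; a smoother weighting curve could be substituted without changing the structure.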

Start by calibrating with both calibrators. Then you need to calibrate the two calibrated areas relative to each other.

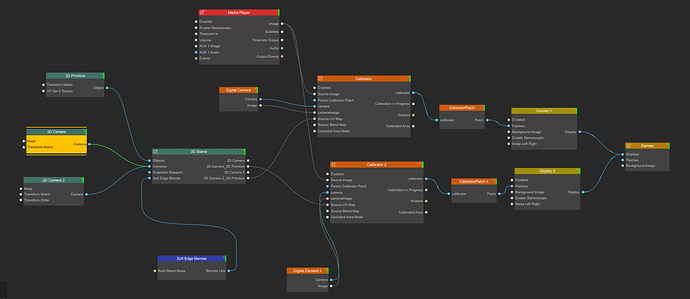

One way is to use a temporary camera that can see the whole screen and calibrate once, treating the 2 calibrated areas as projectors:

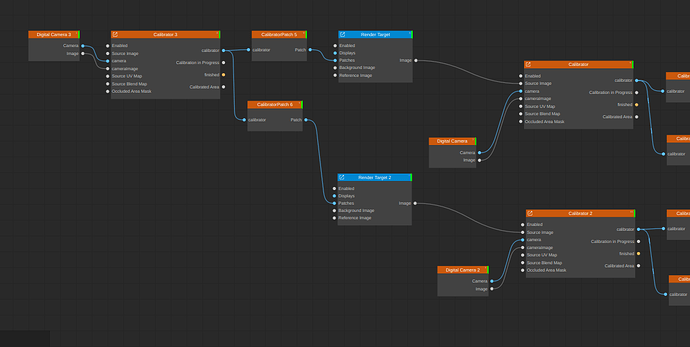

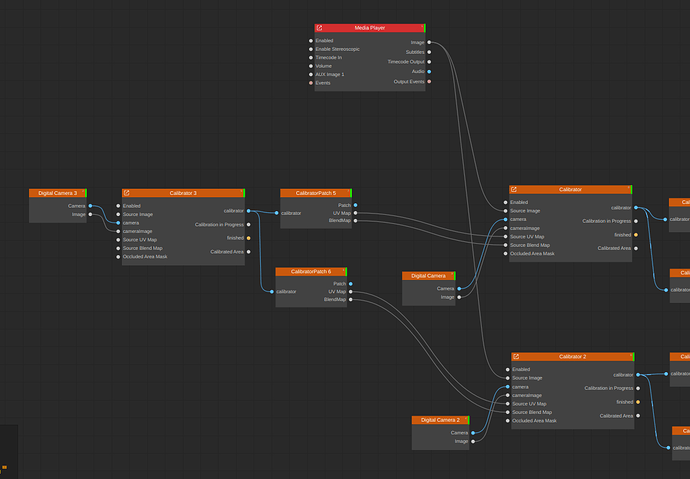

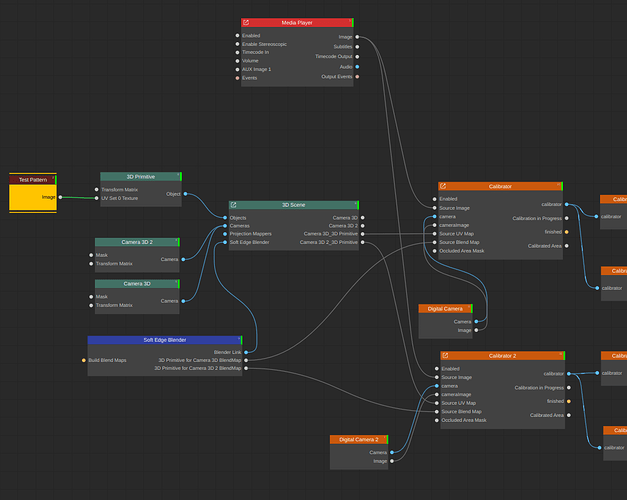

After calibration has finished, connect the UV map and blend map from the 3rd calibrator’s patches to the first 2 calibrators as their source UV map and source blend map, and connect one content source (Media Player) to both of those calibrators:

Now you can remove the 3rd, temporary camera and use only the first 2 fixed cameras for future recalibration. The transformation between the first 2 cameras will remain unchanged.
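Conceptually, chaining a calibrator’s UV map with a source UV map is a composition of two lookups: each projector pixel looks up where it lands in its calibrated area, and that position is looked up again in the source UV map to get the final content coordinate. A minimal nearest-neighbour sketch, assuming UV maps stored as small grids of normalized (u, v) pairs; the data layout and function names are hypothetical, and Screenberry’s internal format and filtering are not described here.

```python
# Hypothetical sketch: composing two UV maps with nearest-neighbour lookup.
UV = tuple[float, float]
Grid = list[list[UV]]  # grid[y][x] -> normalized (u, v)


def sample_nearest(grid: Grid, u: float, v: float) -> UV:
    """Return the grid entry closest to normalized (u, v), clamped to the edges."""
    h, w = len(grid), len(grid[0])
    x = min(w - 1, max(0, round(u * (w - 1))))
    y = min(h - 1, max(0, round(v * (h - 1))))
    return grid[y][x]


def compose_uv(calibrator_uv: Grid, source_uv: Grid) -> Grid:
    """content = source_uv(calibrator_uv(pixel)) for every projector pixel."""
    return [[sample_nearest(source_uv, u, v) for (u, v) in row]
            for row in calibrator_uv]


def identity_grid(w: int, h: int) -> Grid:
    """A UV map that leaves coordinates unchanged."""
    return [[(x / (w - 1), y / (h - 1)) for x in range(w)] for y in range(h)]


if __name__ == "__main__":
    projector = [[(0.0, 0.0), (0.5, 0.5), (1.0, 1.0)]]  # three projector pixels
    # A source map that squeezes the calibrated area into the left half of the content.
    squeezed = [[(u * 0.5, v) for (u, v) in row] for row in identity_grid(3, 3)]
    print(compose_uv(projector, squeezed))
```

Bilinear interpolation would be the more usual choice in a real pipeline; nearest-neighbour keeps the sketch short.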

Another way to calibrate the 2 calibrators relative to each other is to use 3D Scene remapping. You use UV maps from the 3D Scene node and blending maps from the Soft Edge Blender node:

Hi, I tried testing with 3D Scene, and it successfully divided the image into 2 hemispheres, but one hemisphere displayed normally and the other was compressed. Is my setup incorrect?

Show me your setup. Maybe you can attach your project file (SBR).

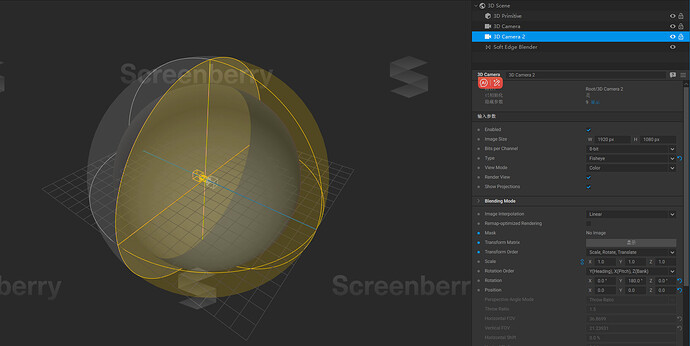

Add a 3D Primitive and set its type to Sphere.

Add a projection mapper and two 3D cameras.

Set the projector and camera positions to 0, 0, 0.

Configure the projector as the spherical type, and configure the 2 cameras as the fisheye type.

Set the view mode of the cameras to “None (Only projectors)”.

Rotate the fisheye cameras as needed. That should work.

Another way to do the same thing is to use the UV map input of the Sphere primitive. Instead of using a projector, just connect your latlong input to the primitive’s UV input and set the cameras’ view mode to Texture.
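To see what each fisheye camera at the sphere centre samples from the latlong content, the geometry can be written out directly. A minimal sketch, assuming an equidistant fisheye model (image radius proportional to angle), y up, and a camera that looks along +z before rotation; these conventions and the function name are assumptions, not Screenberry’s documented camera model.

```python
import math

# Hypothetical sketch: map a fisheye image point to the latlong (equirectangular) texel
# it sees, for a camera at the sphere centre. Assumptions (not Screenberry's documented
# model): equidistant fisheye, y up, camera looking along +z before rotation.


def fisheye_to_latlong(px: float, py: float, yaw_deg: float = 0.0,
                       pitch_deg: float = 0.0, fov_deg: float = 180.0):
    """Map normalized fisheye coords (px, py in [-1, 1]) to latlong (u, v) in [0, 1].

    Returns None for points outside the fisheye circle.
    """
    r = math.hypot(px, py)
    if r > 1.0:
        return None
    theta = r * math.radians(fov_deg) / 2  # angle from the optical axis
    phi = math.atan2(py, px)               # angle around the optical axis
    x = math.sin(theta) * math.cos(phi)
    y = math.sin(theta) * math.sin(phi)
    z = math.cos(theta)

    # Tilt the camera up/down (rotation about x), then turn it left/right (about y).
    p, a = math.radians(pitch_deg), math.radians(yaw_deg)
    y, z = y * math.cos(p) + z * math.sin(p), -y * math.sin(p) + z * math.cos(p)
    x, z = x * math.cos(a) + z * math.sin(a), -x * math.sin(a) + z * math.cos(a)

    lon = math.atan2(x, z)                   # -pi..pi, 0 = straight ahead at yaw 0
    lat = math.asin(max(-1.0, min(1.0, y)))  # -pi/2..pi/2, +pi/2 = zenith
    return 0.5 + lon / (2 * math.pi), 0.5 - lat / math.pi


if __name__ == "__main__":
    # Two 180-degree cameras back to back, each covering one hemisphere.
    for yaw in (0.0, 180.0):
        print(yaw, fisheye_to_latlong(0.0, 0.0, yaw_deg=yaw))
```

In this model the image radius scales with angle divided by `fov_deg`, so two cameras with different field-of-view values map the same content at different scales; if one hemisphere looks compressed, comparing the two cameras’ settings may be worth checking.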

Source UV Map and Blending Map are used for multi-camera calibration. I’m not quite sure what you’re trying to achieve with this setup.

If you’re trying to calibrate a sphere, that’s great. We just finished calibrating a sphere in South Korea: a 12-projector setup with 4-camera calibration. The 4 cameras are located at the center of the bridge, looking in 4 directions offset by 90 degrees each. The calibration process is semi-automatic and quite complicated. If you have a project, please contact us for support.
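For a layout like the one described (4 cameras at the centre, 90 degrees apart), a quick coverage check is which cameras can see a given direction on the sphere. A minimal sketch, assuming level cameras with yaws of 0, 90, 180 and 270 degrees and an identical circular field of view; the field of view and function names are hypothetical, since the real rig’s lenses and tilts are not given here.

```python
import math

# Hypothetical rig: four level cameras at the sphere centre, 90 degrees apart.
CAMERA_YAWS_DEG = (0.0, 90.0, 180.0, 270.0)


def direction(lon_deg: float, lat_deg: float) -> tuple[float, float, float]:
    """Unit vector for a longitude/latitude on the sphere (y up, lon 0 along +z)."""
    lon, lat = math.radians(lon_deg), math.radians(lat_deg)
    return (math.cos(lat) * math.sin(lon), math.sin(lat), math.cos(lat) * math.cos(lon))


def cameras_seeing(lon_deg: float, lat_deg: float, fov_deg: float) -> list[int]:
    """Indices of cameras whose circular field of view contains the direction."""
    d = direction(lon_deg, lat_deg)
    half_fov = math.radians(fov_deg) / 2
    seen = []
    for i, yaw in enumerate(CAMERA_YAWS_DEG):
        axis = direction(yaw, 0.0)
        cos_angle = sum(a * b for a, b in zip(d, axis))
        # Clamp to guard acos against rounding slightly outside [-1, 1].
        if math.acos(max(-1.0, min(1.0, cos_angle))) <= half_fov:
            seen.append(i)
    return seen


if __name__ == "__main__":
    for lon, lat in [(0, 0), (45, 0), (45, 60), (0, 90)]:
        print(lon, lat, cameras_seeing(lon, lat, fov_deg=190.0))
```

With level cameras the zenith sits about 90 degrees from every camera axis, so in this model it is only covered when the field of view exceeds 180 degrees or the cameras are tilted upward.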

We’re planning to wrap all of this into a sphere calibration widget to simplify the process.

1. My idea is to project the UV map onto the 2 hemispheres, and then use a camera to geometrically correct each hemisphere separately.

2. I need to build a small scene to show to clients and promote such projects in the future.